Tobias Gockel: Data streaming at DKV Mobility - How we develop software at DKV Mobility.

Starting with the context of how our organization is built around cross-functional product teams and how we develop software at DKV, we describe two solutions for working with Kafka:

Management for Kafka is a tool that applies the GitOps approach [1] to Kafka cluster orchestration.

The CLI pod for Kafka allows developers to debug comfortably according to the principle of least privilege [2].

[1] GitOps is an operational framework that takes DevOps best practices used for application development, such as version control, collaboration, compliance, and CI/CD tooling, and applies them to infrastructure automation.

[2] A subject should be given only those privileges needed for it to complete its task.

Organizational context

The Customer Product Services (CPS) area follows a DevOps approach, with cross-functional, self-responsible product teams developing digital products in the cloud for our customers. Our two core products are the integrated web portal (Cockpit) for managing service cards, toll boxes, and transactions for truck or car fleets, and the mobile app, which is aimed at drivers and supports navigation and payment.

Organizationally, the area consists of two departments. The Framework Development department contains all teams that provide central services, commonly used frameworks, or data for our product teams, which are organized in the Product Development department. The product teams are cross-functional, self-responsible teams, each focusing on the main products of its business area. For example, the product team TOLL is responsible for all toll-related, customer-facing applications. Responsible means that the product teams develop, maintain, and operate their services and applications via the CI/CD toolchain provided by the platform team. This reflects the DevOps approach, which we understand as the whole team's comprehensive responsibility for the application, without an artificial border between building and running software. The platform team in the Framework Development department operates, for instance, the cloud infrastructure (Azure), the CI/CD toolchain (Azure DevOps), the Kafka cluster, and the IAM solution for our products (Keycloak).

The CPS division is committed to the following key principles:

Self-enablement

- Wherever possible, product teams should be able to do everything they need on their own to avoid bottlenecks and cross-team dependencies

Scalability

- Our organizational structure as well as our infrastructure and our processes are designed and built to allow for easy growth

Security

- Our services are exposed to the internet, so we are making them as secure as possible, and we start as early as possible in the development process

Automation

- We avoid manual activities wherever possible to boost self-enablement and scalability in our processes

Motivation

While we consider our development process and our tech stack in CPS mature, modern, and efficient, managing Kafka resources proved to be a cumbersome workflow, despite the good and intuitive Confluent Control Center. Owing to our access policy, developers had no access to the Confluent Control Center. To create a topic, for example, a developer had to request it from the platform team, which then created it by hand in the Confluent Control Center. This was basically the opposite of an automated, scalable process aimed at self-enablement of the product teams.

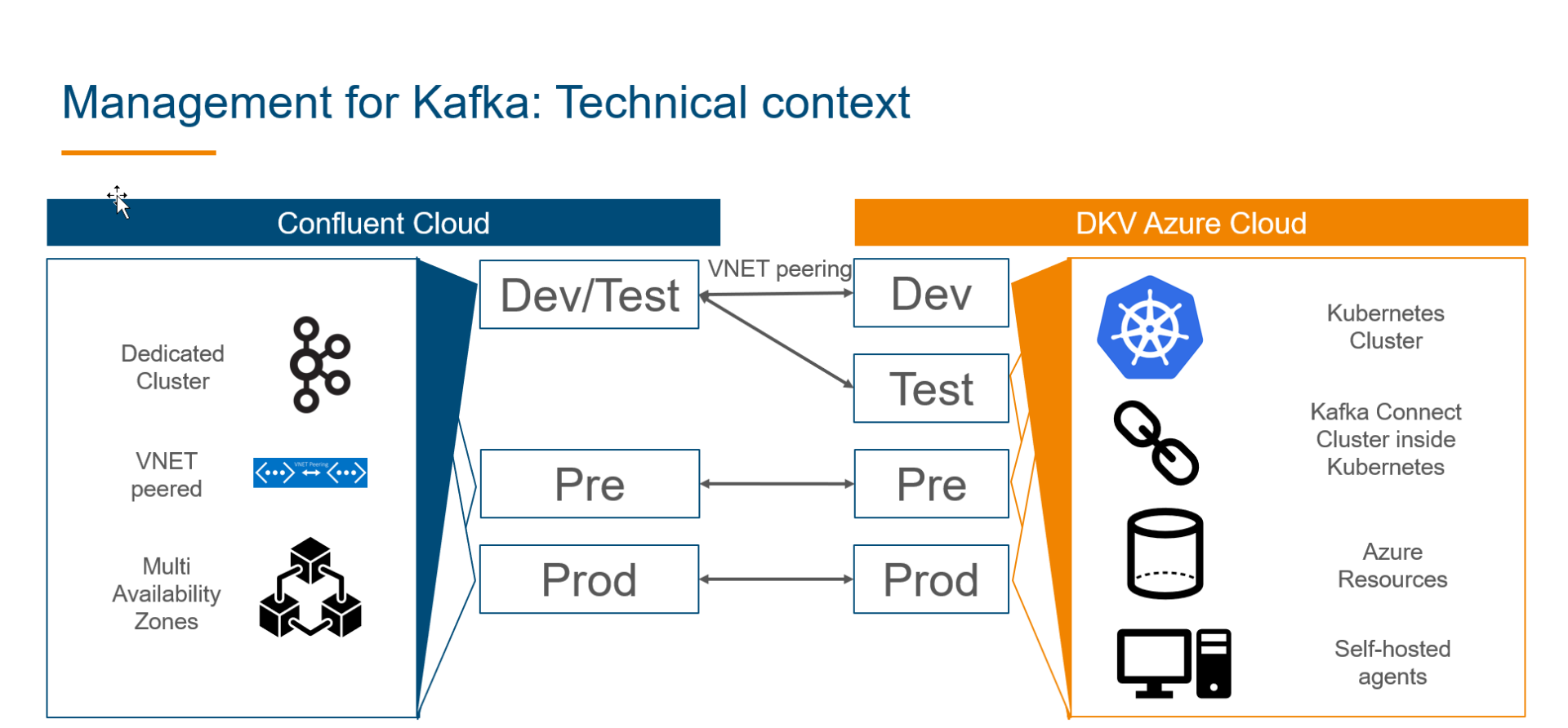

Technical context

The illustration shows the technical setup of our Kafka clusters and our Kubernetes clusters in Azure and the connections between them. On the Confluent side, we administer three dedicated clusters that are VNet-peered to our Kubernetes clusters. Dev and Test are only virtually separated by prefixes, whereas the Preprod and Prod clusters are decoupled and span multiple availability zones. On the Azure side, all four stages have their own separate resources, for example Kubernetes clusters, PostgreSQL servers, key vaults, and storage accounts, as well as an individual Kafka Connect cluster inside Kubernetes for running the required Kafka connectors. Self-hosted agents are also in place.

Management for Kafka

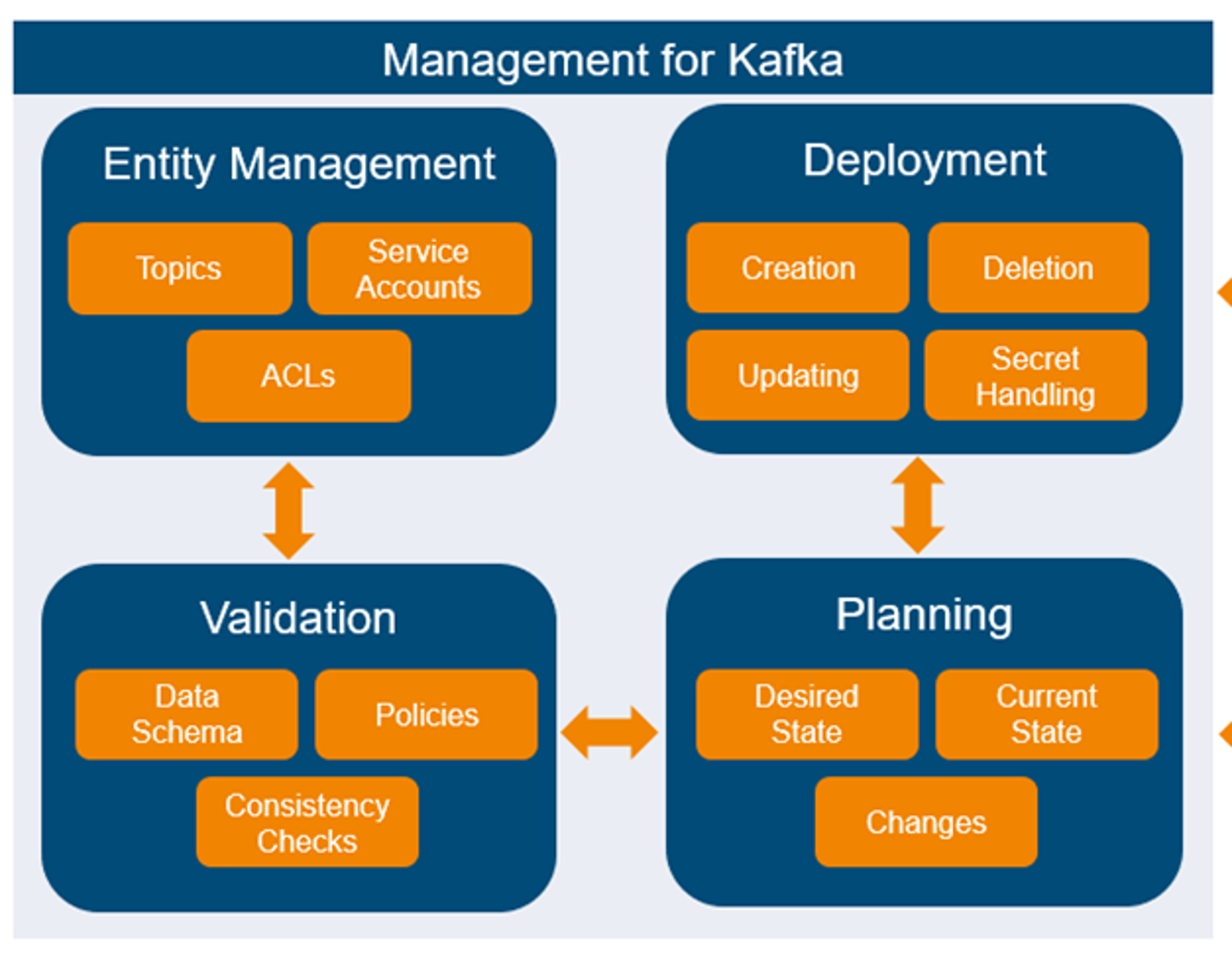

Management for Kafka is our solution for orchestrating Kafka resources based on a GitOps approach. It consists of four main components:

- Entity Management

- Validation

- Planning

- Deployment

Entity management

This component is responsible for the structured storage of Kafka entities (topics, service accounts, and access control lists) and uses parameterized scripts to create, update, or delete them. Through parameterized pipelines, the entity management component prevents invalid input, ensures compliance with policies, creates pull requests automatically, can create multiple resources at once, and ultimately lowers the complexity of these activities.
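To make "structured storage" more concrete, the following is a minimal sketch of how Kafka entities could be modeled and serialized into diff-friendly files for a pull request. The class names, field names, and the JSON layout are assumptions for illustration only; the article does not describe the actual storage format.

```python
import json
from dataclasses import asdict, dataclass, field


# All field names below are hypothetical; the real entity schema is not given.
@dataclass(frozen=True)
class Topic:
    name: str
    owner_team: str
    partitions: int = 3
    config: dict = field(default_factory=dict)


@dataclass(frozen=True)
class ServiceAccount:
    name: str
    owner_team: str


@dataclass(frozen=True)
class Acl:
    service_account: str
    topic: str
    operation: str  # for example "READ" or "WRITE"


def render_entity_file(entity) -> str:
    """Serialize an entity to stable JSON so that Git diffs stay small."""
    payload = {"kind": type(entity).__name__, "spec": asdict(entity)}
    return json.dumps(payload, indent=2, sort_keys=True)


topic = Topic(
    name="toll.transactions",
    owner_team="toll",
    config={"retention.ms": "604800000"},
)
print(render_entity_file(topic))
```

Sorting the keys keeps the rendered file stable between runs, so a pull request only shows the values that actually changed.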

Validation

This component validates the schemas of Kafka entities, e.g., whether the topic configuration is written in the correct format and contains only allowed values. Furthermore, it includes a set of customizable policies such as naming conventions, required metadata, or configuration restrictions for topics or service accounts. Finally, it performs consistency checks, for example on a specific stage order or on the existence of Kafka entities.
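The policy checks described above could look roughly like the sketch below. The naming pattern, the required metadata fields, the allowed configuration keys, and the retention limit are all invented for this example and are not DKV's actual policies. Stage-order and existence checks are left out for brevity.

```python
import re

# Hypothetical policies, chosen only to illustrate the idea.
NAME_PATTERN = re.compile(r"^[a-z][a-z0-9]*(\.[a-z][a-z0-9-]*)+$")
REQUIRED_METADATA = {"owner_team", "description"}
ALLOWED_CONFIG_KEYS = {"cleanup.policy", "retention.ms"}
MAX_RETENTION_MS = 30 * 24 * 60 * 60 * 1000  # 30 days


def validate_topic(topic: dict) -> list[str]:
    """Return a list of policy violations; an empty list means the topic is valid."""
    errors = []

    name = topic.get("name", "")
    if not NAME_PATTERN.match(name):
        errors.append(f"name {name!r} violates the naming convention")

    missing = REQUIRED_METADATA - set(topic.get("metadata", {}))
    for key in sorted(missing):
        errors.append(f"missing metadata field {key!r}")

    config = topic.get("config", {})
    for key in sorted(set(config) - ALLOWED_CONFIG_KEYS):
        errors.append(f"config key {key!r} is not allowed")

    retention = config.get("retention.ms")
    if retention is not None and int(retention) > MAX_RETENTION_MS:
        errors.append("retention.ms exceeds the allowed maximum")

    return errors


candidate = {
    "name": "Bad_Name",
    "metadata": {"owner_team": "toll"},
    "config": {"retention.ms": "604800000"},
}
for problem in validate_topic(candidate):
    print(problem)
```

Returning all violations at once, rather than stopping at the first one, lets a pull request check report everything a developer needs to fix in a single run.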

Planning

This component combines all stored Kafka entities into a desired state, which covers the validation of entities, the existence or non-existence of topics and service accounts, the topic configurations, API keys, and access control lists (ACLs). The desired state is then compared to the current state, and a plan containing the required changes is created. All these activities are wrapped inside a parameterized pipeline.
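The comparison of desired and current state can be sketched as a simple diff. This is an illustration under the assumption that both states are plain mappings from an entity name to its configuration; the function name and the plan format are hypothetical.

```python
def plan_changes(desired: dict, current: dict) -> list[tuple[str, str]]:
    """Return (action, name) steps that move `current` towards `desired`.

    Both arguments map an entity name to its configuration dict.
    """
    plan = []
    # Entities that exist only in the desired state must be created.
    for name in sorted(desired.keys() - current.keys()):
        plan.append(("create", name))
    # Entities present in both states are updated only if they differ.
    for name in sorted(desired.keys() & current.keys()):
        if desired[name] != current[name]:
            plan.append(("update", name))
    # Entities that exist only in the current state must be deleted.
    for name in sorted(current.keys() - desired.keys()):
        plan.append(("delete", name))
    return plan


desired = {
    "toll.transactions": {"retention.ms": "604800000"},
    "toll.events": {"retention.ms": "86400000"},
}
current = {
    "toll.transactions": {"retention.ms": "86400000"},
    "toll.legacy": {},
}
print(plan_changes(desired, current))
# [('create', 'toll.events'), ('update', 'toll.transactions'), ('delete', 'toll.legacy')]
```

An empty plan means the cluster already matches the repository, which makes the planning step safe to run repeatedly.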

Deployment

This component deploys the changes according to the plan via a parameterized pipeline, including the required approvals, and automatically stores the API keys in the dedicated Azure key vaults of the responsible teams. The output is the actually created, deleted, or updated Kafka entities and the associated API keys.
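As a rough sketch of this step, the following function applies a plan only after approval and files each new API key under the owning team. Plain dictionaries stand in for the Kafka cluster and for the per-team key vaults, and the key is just a random token; none of this reflects the real Confluent or Azure Key Vault APIs, and the plan format is an assumption.

```python
import secrets


def deploy(plan, approved: bool, cluster: dict, vault: dict) -> list[tuple[str, str]]:
    """Apply (action, name, owner_team) steps to in-memory stand-ins."""
    if not approved:
        raise PermissionError("the plan has not been approved")

    applied = []
    for action, name, team in plan:
        if action in ("create", "update"):
            cluster[name] = {"owner_team": team}
            if action == "create":
                # A new entity gets a key that is stored for its own team only.
                vault.setdefault(team, {})[name] = secrets.token_urlsafe(16)
        elif action == "delete":
            cluster.pop(name, None)
            vault.get(team, {}).pop(name, None)
        else:
            raise ValueError(f"unknown action {action!r}")
        applied.append((action, name))
    return applied


cluster, vault = {}, {}
steps = [("create", "sa-toll-reader", "toll")]
print(deploy(steps, approved=True, cluster=cluster, vault=vault))
print(sorted(vault["toll"]))
```

Keeping the approval check at the very start means that an unapproved plan changes neither the cluster nor any vault.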

With this setup, we now have a solution in place that follows the key principles mentioned earlier, with the additional benefit that it is easy to use from a developer's perspective.

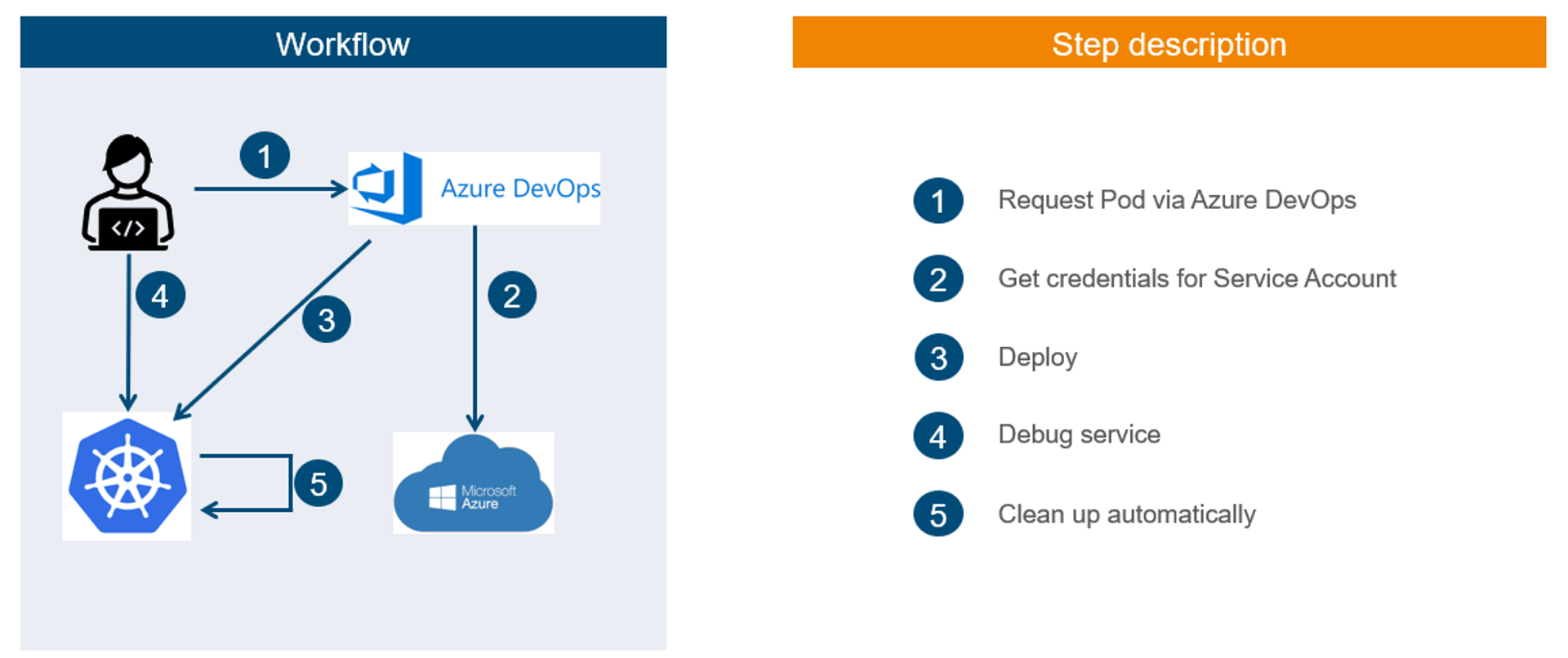

Debugging Kafka

While improving our Kafka orchestration, we also wanted to give developers better options to debug their Kafka resources securely, according to the principle of least privilege. We therefore created a self-service in Azure DevOps that lets developers deploy a pod in our cluster containing both the default Kafka CLI scripts and customized scripts. The pod is created in a namespace belonging to the team that owns the selected service account, and the credentials of that service account are automatically mounted inside the pod. This means developers can only use service accounts that belong to their own team. The developer can then debug on behalf of the selected service account. Finally, after a set period of time, the whole setup is cleaned up automatically, which not only reduces the risk of unwanted costs but also avoids security risks.
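The ownership check and the resulting pod definition could be sketched as follows. The image name, secret name, mount path, and the `owners` lookup are hypothetical. Using the Kubernetes pod field `activeDeadlineSeconds` to bound the runtime is also an assumption, because the article does not say how the automatic cleanup is implemented.

```python
def build_debug_pod(
    developer_team: str,
    service_account: str,
    owners: dict,
    ttl_seconds: int = 3600,
) -> dict:
    """Build a pod manifest for a debug session, or refuse if the team does not own the account."""
    owner_team = owners.get(service_account)
    if owner_team != developer_team:
        raise PermissionError(
            f"{service_account!r} does not belong to team {developer_team!r}"
        )

    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": f"kafka-cli-{service_account}",
            "namespace": owner_team,  # the namespace of the owning team
        },
        "spec": {
            "activeDeadlineSeconds": ttl_seconds,  # assumed time limit
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "kafka-cli",
                    "image": "example.invalid/kafka-cli:latest",  # placeholder image
                    "command": ["sleep", "infinity"],
                    "volumeMounts": [
                        {
                            "name": "kafka-credentials",
                            "mountPath": "/etc/kafka-credentials",
                            "readOnly": True,
                        }
                    ],
                }
            ],
            "volumes": [
                {
                    "name": "kafka-credentials",
                    "secret": {"secretName": f"{service_account}-credentials"},
                }
            ],
        },
    }


owners = {"sa-toll-reader": "toll"}
pod = build_debug_pod("toll", "sa-toll-reader", owners)
print(pod["metadata"])
```

Because only the selected service account's credentials are mounted, a debug session in this sketch has exactly the permissions of that account and nothing more.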

Again, this implementation for debugging Kafka resources enables us in the CPS unit to follow our key principles of self-enablement, scalability, security and automation.

If you want to know more about the people in CPS, our projects, or our tech stack, feel free to reach out to Tobias.